Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Top 5 Generative AI Papers (must read)Подробнее

Visualizing transformers and attention | Talk for TNG Big Tech Day '24Подробнее

Transformers From Scratch - Part 1 | Positional Encoding, Attention, Layer NormalizationПодробнее

Do we need Attention? A Mamba PrimerПодробнее

What are Transformer Models and how do they work?Подробнее

LLM Mastery in 30 Days: Day 3 - The Math Behind Transformers ArchitectureПодробнее

Attention in transformers, visually explained | DL6Подробнее

Transformer model explanation - Attention is all you need paperПодробнее

Vision Transformer BasicsПодробнее

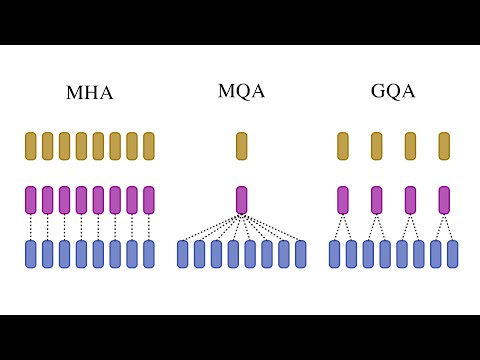

Variants of Multi-head attention: Multi-query (MQA) and Grouped-query attention (GQA)Подробнее

TensorFlow Transformer model from Scratch (Attention is all you need)Подробнее

The math behind Attention: Keys, Queries, and Values matricesПодробнее

Coding LLaMA 2 from scratch in PyTorch - KV Cache, Grouped Query Attention, Rotary PE, RMSNormПодробнее

[ 100k Special ] Transformers: Zero to HeroПодробнее

![[ 100k Special ] Transformers: Zero to Hero](https://img.youtube.com/vi/rPFkX5fJdRY/0.jpg)

BERT explained: Training, Inference, BERT vs GPT/LLamA, Fine tuning, [CLS] tokenПодробнее

![BERT explained: Training, Inference, BERT vs GPT/LLamA, Fine tuning, [CLS] token](https://img.youtube.com/vi/90mGPxR2GgY/0.jpg)

How LLM transformers work with matrix math and code - made easy!Подробнее

LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLUПодробнее

Decoder-Only Transformers, ChatGPTs specific Transformer, Clearly Explained!!!Подробнее

Attention is all you Need! [Explained] part-2Подробнее

![Attention is all you Need! [Explained] part-2](https://img.youtube.com/vi/LvJeLbb2WQQ/0.jpg)

Neural Attention - This simple example will change how you think about itПодробнее