Attention Is All You Need! [Explained] Part 2

Understanding Transformers & Attention: How ChatGPT Really Works! Part 2

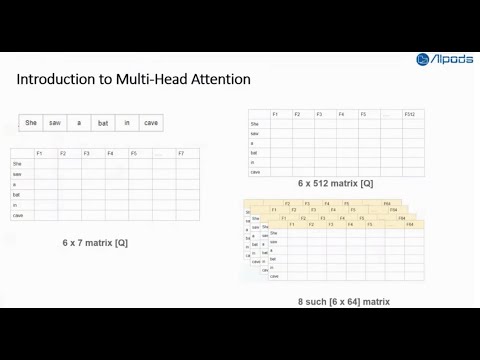

Lecture 18: Multi Head Attention Part 2 - Entire mathematics explained

The Transformer Model Explained, Part 2: A Closer Look at AI's Core Concepts and Processes

Attention Is All You Need - Part 2: Introduction to Multi-Head & decoding the mathematics behind.

LLMs aren't all you Need - Part 2 Getting Data into Retrieval-Augmented Generation (RAG)

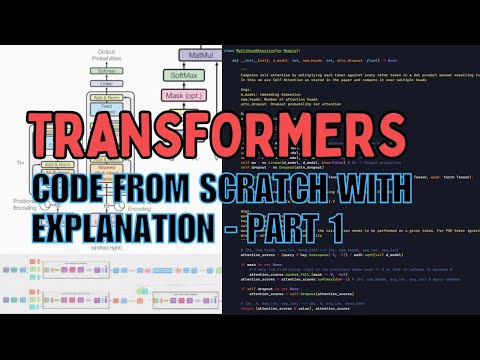

Encoder in Transformers Code - Part 2

AI Reading List (by Ilya Sutskever) - Part 3

Multi-Head Attention Mechanism and Positional Encodings in Transformers Explained | LLMs | GenAI

The Transformer Model Explained, Part 2: A Closer Look at AI's Core Concepts and Processes (LD)

Attention in transformers, visually explained | DL6

Mechanistic Interpretability of LLMs Part 2 - Arxiv Dives with Oxen.ai

Introduction to Generative AI - Part 2. Transformers, explained: Understanding the model behind GPT

Transformers From Scratch - Part 1 | Positional Encoding, Attention, Layer Normalization

Introduction to Transformers | Transformers Part 1

Complete Course NLP Advanced - Part 2 | Transformers, LLMs, GenAI Projects

Live - Transformers Architecture Understanding in Depth - Attention Is All You Need Part 2

18. Transformers Explained Easily: Part 2 - Generative Music AI

GPT (nanoGPT) from a beginner’s perspective (Part 2 Final)

LLM Jargons Explained: Part 2 - Multi Query & Group Query Attention