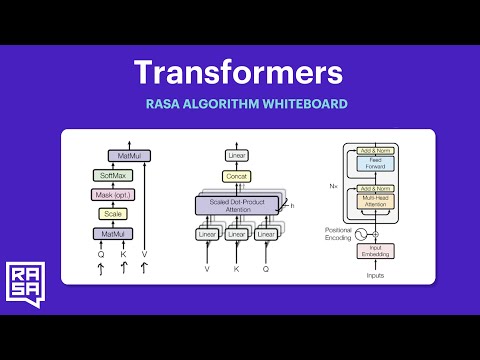

Rasa Algorithm Whiteboard - Transformers & Attention 3: Multi Head Attention

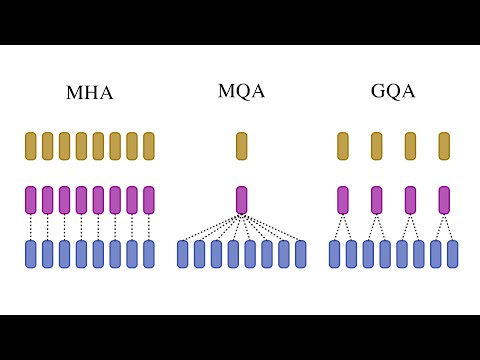

Variants of Multi-head attention: Multi-query (MQA) and Grouped-query attention (GQA)

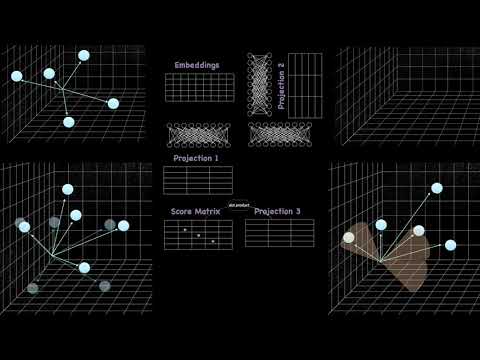

Self-Attention Equations - Math + Illustrations

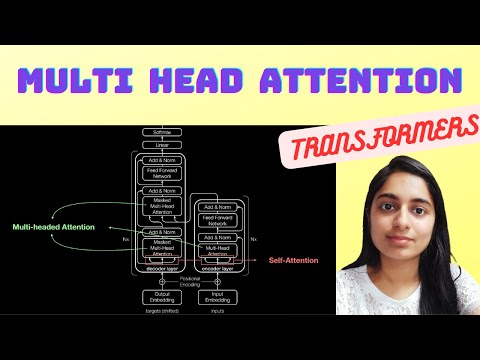

Multi Head Attention in Transformer Neural Networks with Code!

Multi Head Attention in Transformer Neural Networks | Attention is all you need (Transformer)

Visualize the Transformers Multi-Head Attention in Action

The OG transformer: Attention Is All You Need

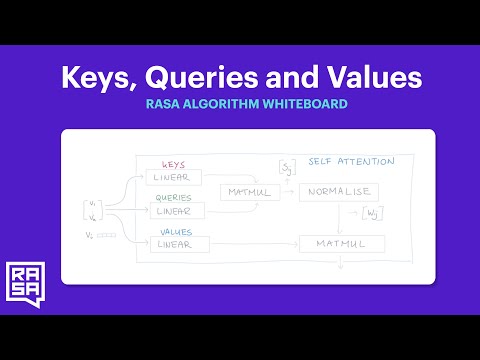

Rasa Algorithm Whiteboard - Transformers & Attention 2: Keys, Values, Queries

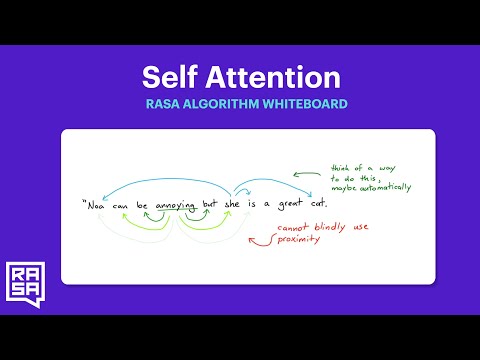

Rasa Algorithm Whiteboard - Transformers & Attention 1: Self Attention

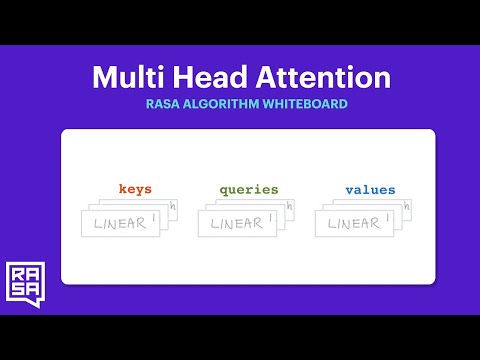

Rasa Algorithm Whiteboard: Transformers & Attention 4 - Transformers