When to use aggregateByKey RDD transf. in PySpark |PySpark 101|Part 14|DM|DataMaking| Data Making

Practical RDD transformation: coalesce using Jupyter |PySpark 101|Part 22| DM | DataMaking

How to use cogroup RDD transformation in PySpark |PySpark 101|Part 17| DM | DataMaking | Data Making

Do not use groupByKey RDD transformation on large data set | PySpark 101 | Part 12 | DM | DataMaking

Why reduceByKey RDD transf. is preferred instead of groupByKey |PySpark 101|Part 13| DM | DataMaking

How to use sortByKey RDD transf. in PySpark |PySpark 101|Part 15| DM | DataMaking | Data Making

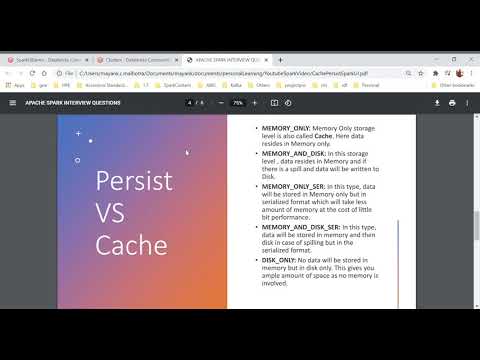

Cache VS Persist With Spark UI: Spark Interview Questions

How to Use countByKey and countByValue on Spark RDD

How to use mapPartitions RDD transformation in PySpark | PySpark 101 | Part 6 | DM | DataMaking

Different ways to create an RDD - PySpark Interview Question

23 - Create RDD using parallelize method - Theory

Learn Apache Spark in 10 Minutes | Step by Step Guide