SGD and Weight Decay Secretly Compress Your Neural Network

What's Hidden in a Randomly Weighted Neural Network?Подробнее

AdamW Optimizer Explained #datascience #machinelearning #deeplearning #optimizationПодробнее

Optimization for Deep Learning (Momentum, RMSprop, AdaGrad, Adam)Подробнее

NN - 16 - L2 Regularization / Weight Decay (Theory + @PyTorch code)Подробнее

AdamW - L2 Regularization vs Weight DecayПодробнее

pytorch weight decayПодробнее

The Algorithm that Helps Machines LearnПодробнее

Neural Network Training: Effect of Weight DecayПодробнее

Regularization in a Neural Network | Dealing with overfittingПодробнее

The Unreasonable Effectiveness of Stochastic Gradient Descent (in 3 minutes)Подробнее

Optimizers - EXPLAINED!Подробнее

Stochastic Gradient Descent: where optimization meets machine learning- Rachel WardПодробнее

Optimizers in Deep Neural NetworksПодробнее

Adam Optimization Algorithm (C2W2L08)Подробнее

Top Optimizers for Neural NetworksПодробнее

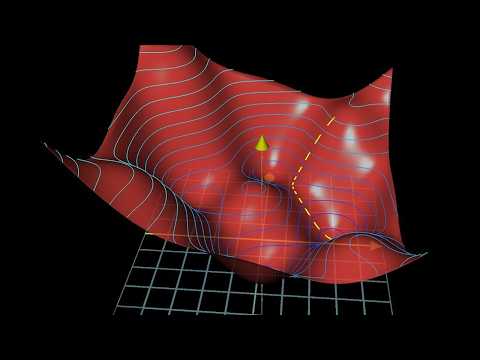

Gradient descent, how neural networks learn | DL2Подробнее

Backpropagation, step-by-step | DL3Подробнее