NEW: INFINI Attention w/ 1 Mio Context Length

GenAI Leave No Context Efficient Infini Context Transformers w Infini attention

Infini Attention - Infinite Attention Models?

[2024 Best AI Paper] Leave No Context Behind: Efficient Infinite Context Transformers with Infini-at

![[2024 Best AI Paper] Leave No Context Behind: Efficient Infinite Context Transformers with Infini-at](https://img.youtube.com/vi/fc7F0y9uh08/0.jpg)

Leave no context behind: Infini attention Efficient Infinite Context Transformers

RING Attention explained: 1 Mio Context Length

Google's Infini-attention: Infinite Context in Language Models 🤯 #ai #googleai #nlp

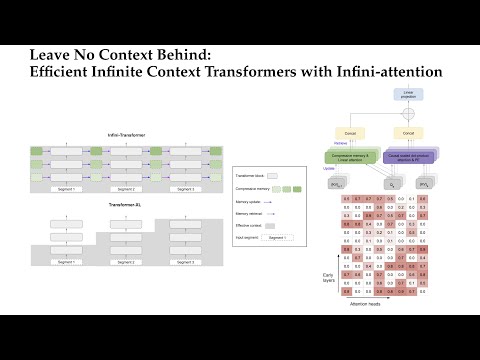

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

Did Google solve Infinite Context Windows in LLMs?

AI Research Radar | GROUNDHOG | Efficient Infinite Context Transformers with Infini-attention | GOEX

GitHub - mustafaaljadery/gemma-2B-10M: Gemma 2B with 10M context length using Infini-attention.

Infini attention and Infini Transformer

Ring Attention for Longer Context Length for LLMs

Efficient Infinite Context Transformers with Infini-Attention (Paper Explained)

Data Science TLDR 10- "Efficient infinite context transformers with infini-attention" (2024)

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

Infini-attention and AM-RADIO

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

Unlocking Infinite Context: Meet Infini attention for Transformers!