Fine-Tuning Multimodal LLMs (LLaVA) for Image Data Parsing

LLaVA - This Open Source Model Can SEE Just like GPT-4-V

LLaVA - the first instruction following multi-modal model (paper explained)

Fine Tune Vision Model LlaVa on Custom Dataset

Image Annotation with LLava & Ollama

How To Fine-tune LLaVA Model (From Your Laptop!)

Fine-tune Multi-modal LLaVA Vision and Language Models

Fine Tune a Multimodal LLM "IDEFICS 9B" for Visual Question Answering

How LLaVA works 🌋 A Multimodal Open Source LLM for image recognition and chat.

Multimodal LLM: Microsoft's new KOSMOS-2.5 for Image Text

New LLaVA AI explained: GPT-4 VISION's Little Brother

Convert Image to text for FREE! 🤯 How to get started?🚀 LLAVA Multimodal (Full Tutorial)

LLava: Visual Instruction Tuning

Fine-tuning Large Language Models (LLMs) | w/ Example Code

Fine Tuning LLaVA

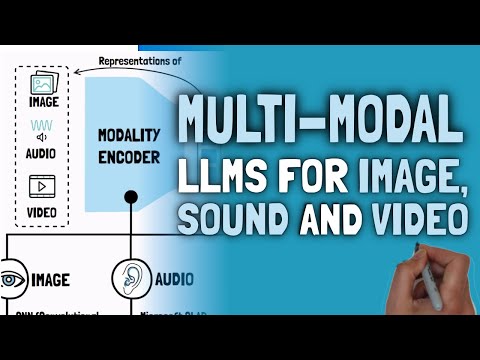

LLM Chronicles #6.3: Multi-Modal LLMs for Image, Sound and Video

LLaVA: A large multi-modal language model