Efficient Self-Attention for Transformers

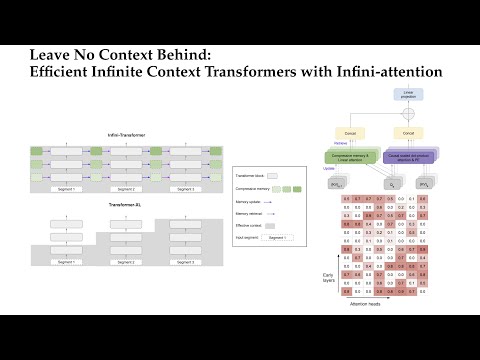

Leave no context behind: Infini attention Efficient Infinite Context TransformersПодробнее

An Effective Video Transformer With Synchronized Spatiotemporal and Spatial Self Attention for ActioПодробнее

SHViT (CVPR2024): Single-Head Vision Transformer with Memory Efficient Macro DesignПодробнее

RoPE Rotary Position Embedding to 100K context lengthПодробнее

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attentionПодробнее

Exploring efficient alternatives to Transformer modelsПодробнее

Multi-criteria Token Fusion with One-step-ahead Attention for Efficient Vision (CVPR 2024)Подробнее

GenAI Leave No Context Efficient Infini Context Transformers w Infini attentionПодробнее

AI Research Radar | GROUNDHOG | Efficient Infinite Context Transformers with Infini-attention | GOEXПодробнее

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attentionПодробнее

Attention is all you need explainedПодробнее

FasterViT: Fast Vision Transformers with Hierarchical AttentionПодробнее

ELI5 FlashAttention: Fast & Efficient Transformer Training - part 2Подробнее

EfficientViT: Memory Efficient Vision Transformer with Cascaded Group Attention (Eng)Подробнее

Separable Self and Mixed Attention Transformers for Efficient Object TrackingПодробнее

Vision Mamba BEATS Transformers!!!Подробнее

Self-Attention Using Scaled Dot-Product ApproachПодробнее

[CVPR 2023] EfficientViT: Memory Efficient Vision Transformer With Cascaded Group AttentionПодробнее

![[CVPR 2023] EfficientViT: Memory Efficient Vision Transformer With Cascaded Group Attention](https://img.youtube.com/vi/ZYATsJboyhM/0.jpg)

GTP-ViT: Efficient Vision Transformers via Graph-Based Token PropagationПодробнее