Create Spark DataFrame from CSV JSON Parquet | PySpark Tutorial for Beginners

PySpark Learning Series | 07-Create DataFrames from Parquet, JSON and CSV

NEW: Learn Apache Spark with Python | PySpark Tutorial For Beginners FULL Course [2024]

Microsoft Fabric: How to load data in Lakehouse using Spark; Python using the notebook

Databricks | Pyspark: Read CSV File -How to upload CSV file in Databricks File System

Convert CSV to Parquet using pySpark in Azure Synapse Analytics

PySpark Tutorial for Beginners

How to read CSV, JSON, PARQUET into Spark DataFrame in Microsoft Fabric (Day 5 of 30)

9. read json file in pyspark | read nested json file in pyspark | read multiline json file

Spark ETL with Files (CSV | JSON | Parquet | TXT | ORC)

Writing Data from Files into Spark Data Frames using Databricks and Pyspark

33. Raw Json (Semistructured Data) to Table (Structured Data) | Very Basic | Azure Databricks

03. Upload Files Directly Into The Databricks File Store or DBFS | CSV | PARQUET | JSON | DELTA etc.

31. Read JSON File in Databricks | Databricks Tutorial for Beginners | Azure Databricks

13. Write Dataframe to a Parquet File | Using PySpark

11. Write Dataframe to CSV File | Using PySpark

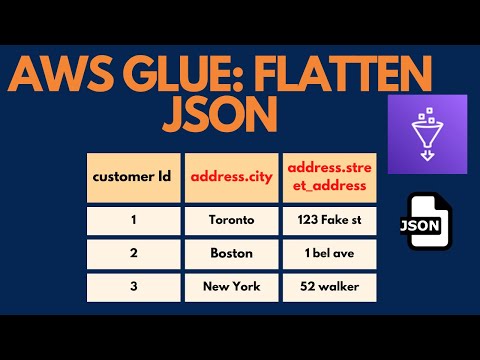

AWS Glue PySpark: Flatten Nested Schema (JSON)

PySpark saveAsTable | Save Spark Dataframe as Parquet file and Table

08. Combine Multiple Parquet Files into A Single Dataframe | PySpark | Databricks

Pyspark Scenarios 21 : Dynamically processing complex json file in pyspark #complexjson #databricks