All You Need To Know About Running LLMs Locally

AI on Mac Made Easy: How to run LLMs locally with OLLAMA in Swift/SwiftUI

RouteLLM - Route LLM Traffic Locally Between Ollama and Any Model

Ollama UI - Your NEW Go-To Local LLM

Running Uncensored and Open Source LLMs on Your Local Machine

host ALL your AI locally

Top 5 Projects for Running LLMs Locally on Your Computer

Run LLMs Locally in 5 Minutes

Zero to Hero - Develop your first app with Local LLMs on Windows | BRK142

FREE Local LLMs on Apple Silicon | FAST!

LLMs aren't all you Need - Part 2 Getting Data into Retrieval-Augmented Generation (RAG)

How To Easily Run & Use LLMs Locally - Ollama & LangChain Integration

100+ Docker Concepts you Need to Know

Grok-1 is Open Source | All you need to know!!!

Your Own Private AI-daho: Using custom Local LLMs from the privacy of your own computer

"okay, but I want Llama 3 for my specific use case" - Here's how

Run LLMs on Mobile Phones Offline Locally | No Android Dev Experience Needed [Beginner Friendly]

![Run LLMs on Mobile Phones Offline Locally | No Android Dev Experience Needed [Beginner Friendly]](https://img.youtube.com/vi/hjCNCh4kfJ4/0.jpg)

List of Different Ways to Run LLMs Locally

Run your own AI (but private)

Run ANY Open-Source LLM Locally (No-Code LMStudio Tutorial)

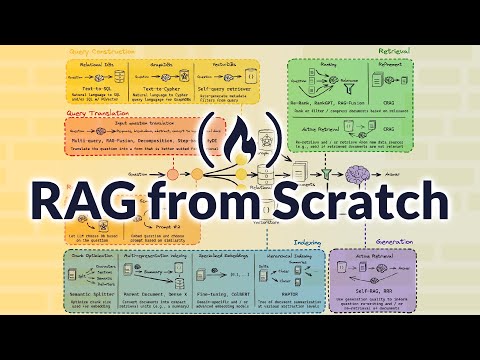

Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer