9. read json file in pyspark | read nested json file in pyspark | read multiline json file

17. azure databricks interview questions | #databricks #pyspark #interview #azure #azuredatabricks

Flatten Nested JSON in PySpark

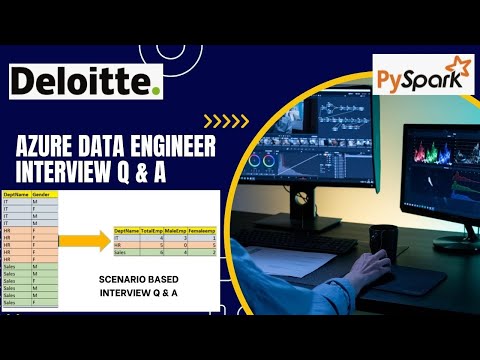

15. deloitte azure databricks interview questions | #databricks #pyspark #interview #azure

16. deloitte azure databricks interview questions | #databricks #pyspark #interview #azure

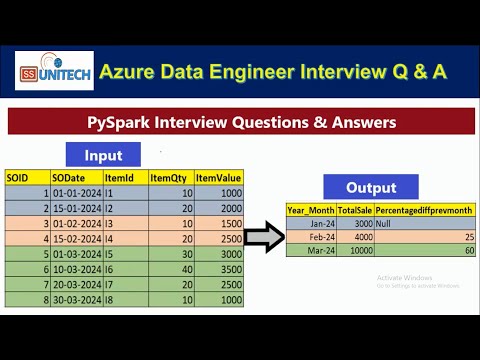

13. PepsiCo pyspark interview question and answer | azure data engineer interview Q & A | databricks

46. Date functions in PySpark | add_months(), date_add(), date_sub() functions #pyspark #spark

44. Date functions in PySpark | current_date(), to_date(), date_format() functions #pyspark #spark

45. Date functions in PySpark | datediff(), months_between(), trunc() functions #pyspark #spark

14. PepsiCo azure databricks interview question and answer | azure data engineer interview Q & A

12. how partitioning works internally in PySpark | partitionBy in pyspark interview q & a | #pyspark

41. subtract vs exceptAll in pyspark | subtract function in pyspark | exceptAll function in pyspark

6. what is data skew in pyspark | pyspark interview questions & answers | databricks interview q & a

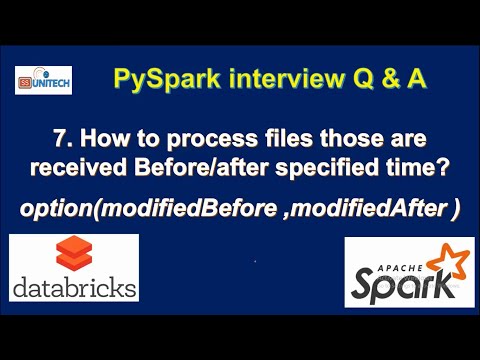

7. modifiedBefore & modifiedAfter options when reading files in pyspark | pyspark interview questions & answers

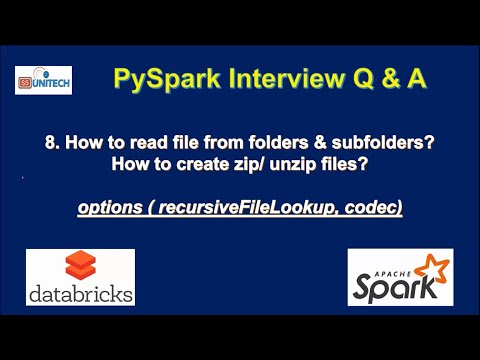

8. how to read files from subfolders in pyspark | how to create zip file in pyspark

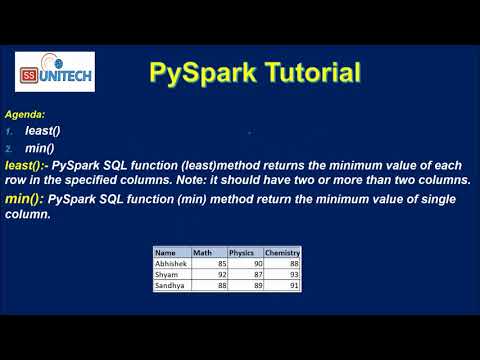

43. least in pyspark | min in pyspark | PySpark tutorial | #pyspark | #databricks | #ssunitech

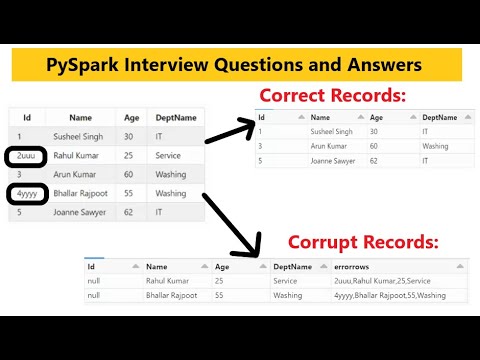

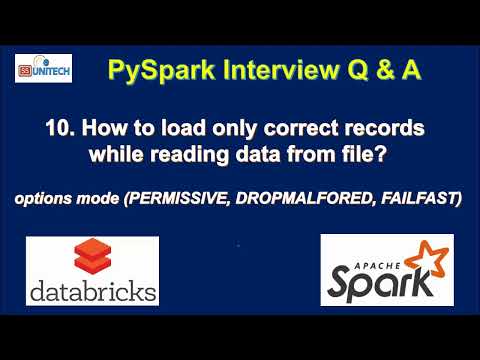

10. How to load only correct records in pyspark | How to Handle Bad Data in pyspark #pyspark

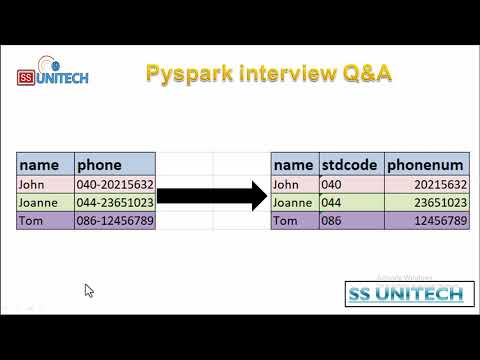

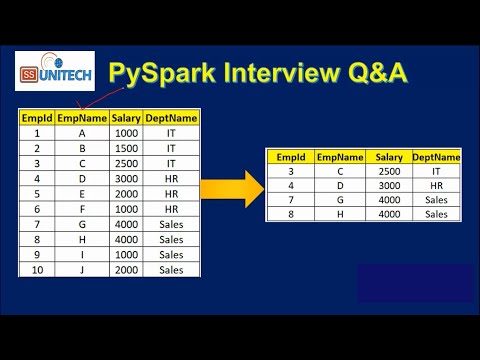

4. pyspark scenario based interview questions and answers | databricks interview question & answers

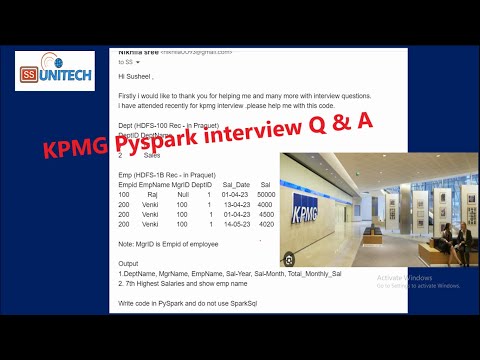

5. KPMG pyspark interview question & answer | databricks scenario based interview question & answer

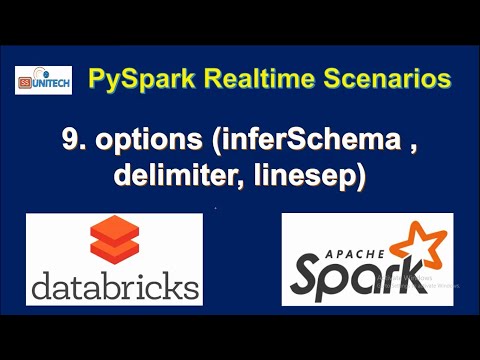

9. delimiter in pyspark | lineSep in pyspark | inferSchema in pyspark | pyspark interview q & a

11. How to handle corrupt records in pyspark | How to load Bad Data in error file pyspark | #pyspark